5 Examples of Biased Survey Questions and Why You Should Avoid Them

Biased survey questions are questions that either:

- Intentionally lead the respondent toward a particular answer, or

- Are phrased so confusingly that they produce unclear responses.

While it’s rarer these days to find organizations intentionally skewing results, survey bias is still common. Usually this is simply because companies lack the skills and experience to put together good-quality surveys.

Intentional survey bias is also dying out largely because customers are more aware of unethical practices, and companies that use them are quickly called out. With today’s cancel culture, it’s best to avoid the practice entirely.

Asking biased survey questions can be genuinely harmful to a company. Based on the skewed answers, it may jump to the wrong conclusions, draw the wrong insights, and take the wrong actions.

You’ll want to avoid biased survey questions at all costs; it’s vital that the feedback you get is impartial and honest.

By reading this article, you'll learn:

- The five types of biased survey questions to avoid

- The consequences each one can have for your business (whether you’ve made the survey biased intentionally or not!)

- Examples explaining why they’re bad

1. Leading questions

Leading questions are the easiest kind of bias to spot, as they make it very clear that there is a “correct” answer the question is steering you towards. They produce skewed information, because the respondent was never given the option of an honest response to begin with.

Examples of leading questions

“How amazing was your experience with our customer service team?”

This question already assumes the customer thought the customer service team was amazing, leaving no room for any other answer. The customer is now obliged to rate the team on a 5-point scale of how amazing it was.

“What problems did you have with the launch of this new product?”

Again, this question assumes that there was something wrong in the first place and will have the customer looking for problems in their answer.

“Would you be worried if we discontinued this product line?”

Rather than making an assumption like the first two questions, this example uses emotional language to lead the customer. The word “worried” suggests to the respondent that they should be worried, simply because it appears in the question.

Consequences of leading questions

- Your survey responses will be significantly skewed

- You won’t gain any genuinely new results or insights

- Customers may think you’re deliberately steering them toward a certain response, which could in turn lead them to leave a negative review or call out your leading survey on social media.

What to do instead

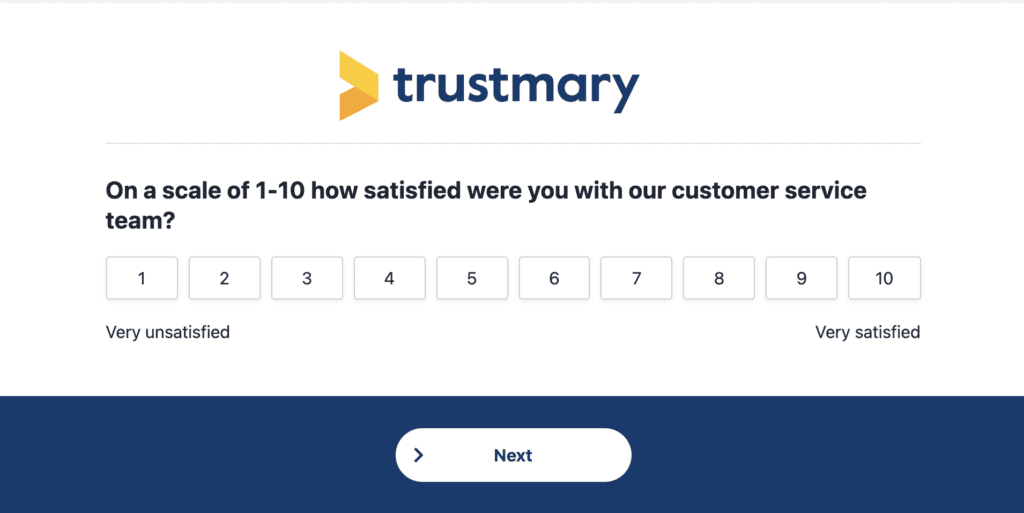

Make sure your questions give the customer real options so they can answer objectively. For example, instead of asking how amazing their customer service experience was, ask:

“On a scale of 1-10, how satisfied were you with our customer service team?”

This doesn’t lead them into a response and gives the customer a chance to provide a useful and easily measurable rating.

2. Vague or ambiguous questions

Many organizations fall victim to this type of question without realizing it. On the surface, these questions may look honest and harmless enough, but their vagueness can do more harm than good, confusing customers into giving poor responses.

Examples of vague survey questions

“How do we compare to our competitors?”

This question is far too broad. Maybe your customers have never actually used your competitors’ products, so they can’t say.

Or maybe it’s not something they’ve ever thought about before, and they decide to start researching your competitors. You also haven’t given a benchmark to compare against: do you mean your product? Your level of customer service? Your price? You’re leaving the interpretation entirely in the customer’s hands.

“Do you think your family members would like product X?”

Here the mistake is using a word like “think”, which different people interpret in different ways. Words like “feel” or “expect” have the same problem. You’re asking the customer to give broad, subjective answers and respond emotionally. Your customers may also have no idea how their family members would react to the product, and rather than going to ask them, they may simply abandon your survey.

Consequences

- If your question isn’t specific enough, the answers you get are likely to vary so wildly that any data you collect becomes useless. Vague questions spark different thoughts in different people, so keep your questions focused and specific.

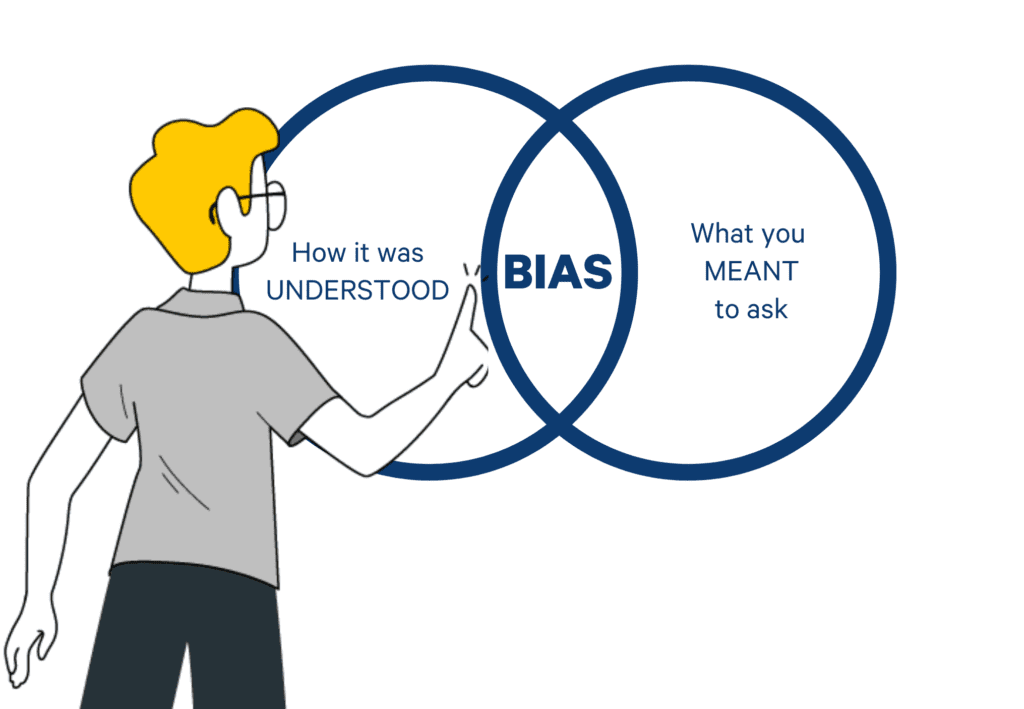

- The customer won’t always interpret the question the way you expect them to, skewing your final results.

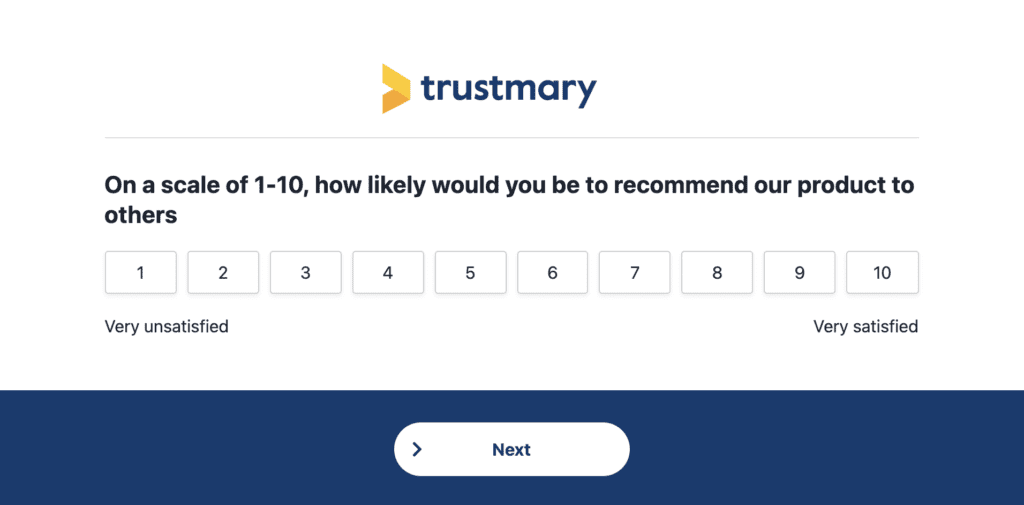

What to do instead

Be much more specific with your customer feedback questions and get to the point. Instead of asking customers what they think others might like, ask:

“On a scale of 1-10, how likely would you be to recommend our product to others?”

This takes the ambiguity away and gets them focusing on one topic, again with an easily measurable response.

3. Double-barreled questions

These questions ask the customer for an opinion on two topics (often loosely related) but provide room for only one response.

This is another common, unintentional mistake: in their desire to gather more information, organizations end up doubling up their questions.

Examples

“How satisfied are you with our customer service and aftercare?”

While at first glance these topics look related, they’re actually two very different topics altogether.

The customer may have had excellent customer service but found their aftercare package terrible. The question doesn’t allow them to differentiate between the two and give separate opinions.

“Would you like our product to be cheaper and more value for money?”

Again, as with the above example, these are really two separate topics. “Value for money” can mean different things to different people.

While some might see it as synonymous with “cheap”, others will take value to mean a higher price for a higher-quality product. Again, your answers will be skewed by people’s subjective interpretations of the statement.

Consequences

You’ll confuse your customers and get skewed results. The worst-case scenario is that you annoy them by not giving them a chance to respond to each topic separately.

What to do instead

Never combine two questions in one. The simple solution is to split the questions into two:

“How satisfied were you with our customer service?”

“How satisfied were you with our customer aftercare?”

4. Absolute questions

These questions can bias your respondents' choices by forcing them into an absolute categorical response when they might not have one.

They use words like

- Never

- Always

- All

You’re essentially asking the customer to be 100% certain about something.

Examples of biased absolute questions

“Do you always use product X for your cleaning needs?”

The problem is that the answer will almost certainly be no.

The chances of someone using your product 100% of the time are very slim, so your results will reflect poorly on your product while telling you little about how customers actually use it.

“Tell us why you have never purchased our product”

This question not only isolates the respondent by singling them out as someone who hasn’t bought your product, but also comes across as aggressive and pushy. You’re leaving your customer very little room to maneuver.

Consequences

These questions are usually too inflexible to use in a survey. If you don’t give respondents the chance to opt out of a question, they’ll either be forced to give an answer that doesn’t apply to them (skewing your results again) or abandon the survey.

If the questions themselves sound aggressive because of their absolute language, the customer may in turn respond aggressively. This can leave you with a lot of negative feedback.

What to do instead

Never use absolutes (and yes, we’re aware of the irony of using an absolute to get our point across!).

Use specific options instead so customers have a choice:

“What discourages you from purchasing our product?”

- The price is too high

- The quality is not good

- I wasn't aware of the product

- Other

- I don't want to answer

5. Acquiescence bias questions

These questions usually offer a binary choice, such as yes/no or agree/disagree.

Their wording makes respondents more likely to answer every question in the survey positively, simply clicking “yes” or “agree” to speed through even when they don’t completely agree with the statement.

People are often more likely to respond positively when only two options are presented.

Examples of acquiescence bias questions

“Is our level of customer service satisfactory?” - Yes/No

A respondent’s view of your customer service most likely falls somewhere between the two extremes of yes and no, but because they’ve probably had more good experiences than bad, they’ll simply click “yes” and move on. There’s no way for them to add any nuance or detail to their answer.

“Our product is easy to use.” - Agree/Disagree

As above, the customer most likely got your product working without any major problems, so they’ll naturally hit “agree”. Only customers who had a terrible experience or found the product totally unusable are going to disagree.

Consequences

You’ll ultimately learn nothing of value from these types of questions.

Your entire survey will essentially be a waste of time and money. You’ll end up with an “everything seems fine” scenario, because no one is giving you genuinely valuable feedback; they’re too busy agreeing with all of your statements.

What to do instead

Another simple one: just don’t use yes/no or agree/disagree questions. Instead, offer multiple options for the customer to choose from, or provide an open text box.

We’ve given you a few tips on avoiding biased questions. If you’d like to know more about

- writing effective survey questions, or

- crafting the best survey subject lines,

we’ve written guides that cover the key dos and don’ts.

The key thing is to keep each question focused on one specific topic, never lead your customers, and keep things simple.

Once you get positive responses, remember to respond to them!

Next Steps

First, read our ultimate guide to surveys, which covers everything from the best survey methods to survey data analysis.

Below you can see an embedded survey made with Trustmary’s own drag-and-drop survey maker.